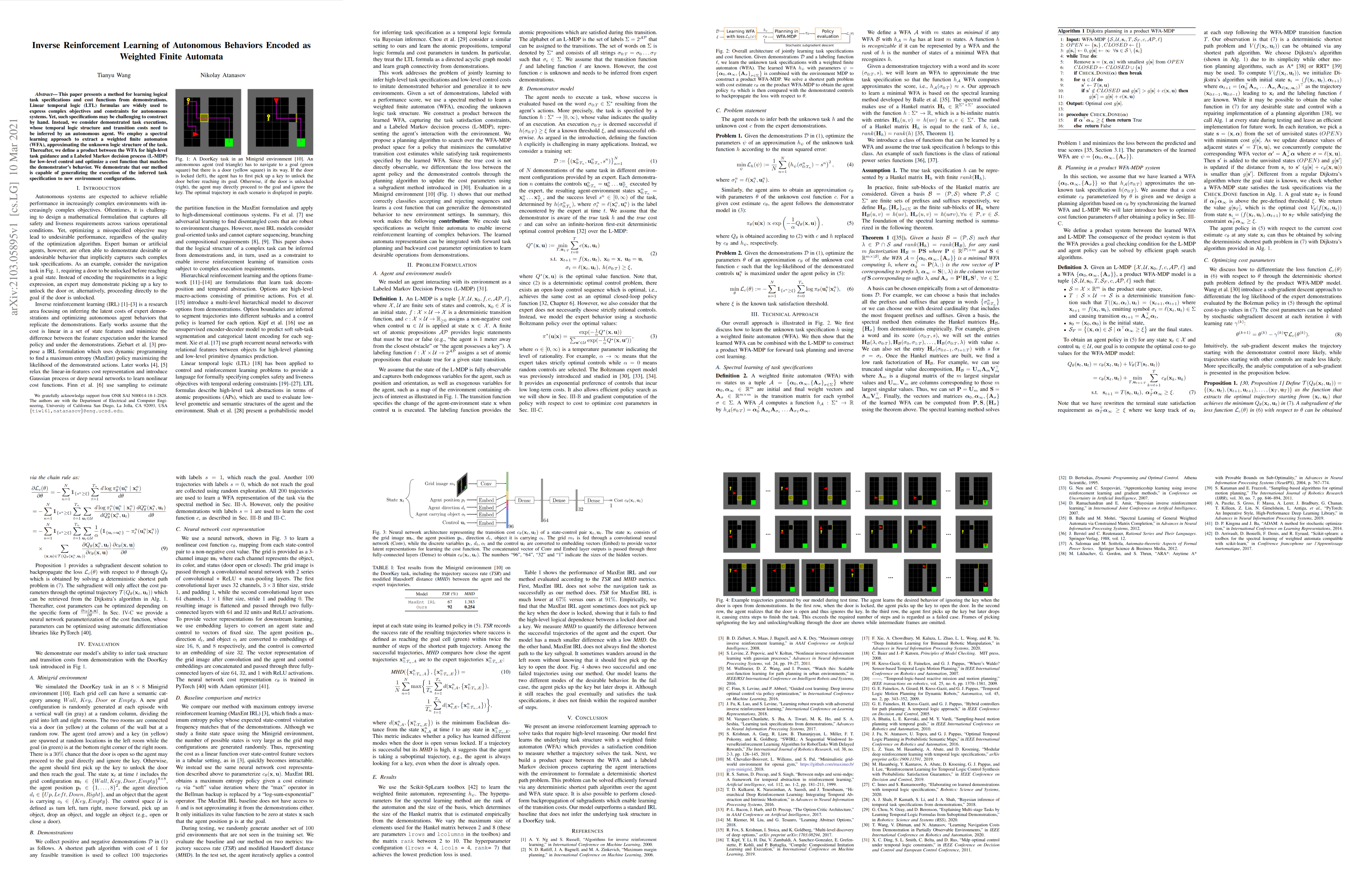

This paper presents a method for learning logical task specifications and cost functions from demonstrations. Linear temporal logic (LTL) formulas are widely used to express complex objectives and constraints for autonomous systems, yet such specifications can be challenging to construct by hand. Instead, we consider demonstrated task executions, whose temporal logic structure and transition costs must be inferred by an autonomous agent. We employ a spectral learning approach to extract a weighted finite automaton (WFA) that approximates the unknown logic structure of the task. We then define a product between the WFA, which provides high-level task guidance, and a labeled Markov decision process (L-MDP), which models low-level control, and optimize a cost function that matches the demonstrator's behavior. We demonstrate that our method generalizes the execution of the inferred task specification to new environment configurations.
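To make the spectral learning step more concrete, here is a minimal sketch of the standard Hankel-matrix spectral method for recovering a WFA from string values. It is an illustration under stated assumptions, not the paper's implementation: the two-symbol alphabet, the toy target automaton, the prefix/suffix basis, and the names `wfa_value` and `spectral_wfa` are all hypothetical, and the function values are computed exactly rather than estimated from demonstrations.

```python
import itertools
import numpy as np


def wfa_value(alpha, A, beta, word):
    """Evaluate a WFA on a word: alpha^T A[w1] ... A[wn] beta."""
    v = alpha
    for symbol in word:
        v = v @ A[symbol]
    return float(v @ beta)


def spectral_wfa(f, alphabet, prefixes, suffixes, rank):
    """Recover a rank-`rank` WFA from Hankel blocks of f (standard spectral method).

    Assumes "" is in both `prefixes` and `suffixes` and that the Hankel
    block over this basis has rank `rank`.
    """
    # Hankel block H[p, s] = f(p s) and shifted blocks H_a[p, s] = f(p a s).
    H = np.array([[f(p + s) for s in suffixes] for p in prefixes])
    H_sym = {a: np.array([[f(p + a + s) for s in suffixes] for p in prefixes])
             for a in alphabet}
    # Top right-singular vectors give a low-dimensional suffix projection.
    _, _, Vt = np.linalg.svd(H, full_matrices=False)
    V = Vt[:rank].T
    F_pinv = np.linalg.pinv(H @ V)
    alpha = H[prefixes.index("")] @ V            # row of the empty prefix
    beta = F_pinv @ H[:, suffixes.index("")]     # column of the empty suffix
    A = {a: F_pinv @ H_sym[a] @ V for a in alphabet}
    return alpha, A, beta


# Hypothetical 2-state target WFA over the labels "a" and "b".
true_alpha = np.array([1.0, 0.0])
true_beta = np.array([0.0, 1.0])
true_A = {"a": np.array([[0.5, 0.5], [0.0, 1.0]]),
          "b": np.array([[0.2, 0.0], [0.3, 0.5]])}


def target(word):
    return wfa_value(true_alpha, true_A, true_beta, word)


basis = ["", "a", "b"]  # assumed prefix and suffix basis
learned = spectral_wfa(target, "ab", basis, basis, rank=2)

# Compare the learned and target automata on all words up to length 4.
words = ["".join(w) for n in range(5) for w in itertools.product("ab", repeat=n)]
max_err = max(abs(wfa_value(*learned, w) - target(w)) for w in words)
```

The learned automaton matches the target only up to a change of basis of the state space, so the comparison is made on string values rather than on the matrices themselves. In a demonstration-learning setting, the entries of the Hankel blocks would be empirical estimates, and the truncated SVD would then act as a low-rank approximation.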

BibTeX

Acknowledgements

We gratefully acknowledge support from NSF CRII IIS-1755568 and ONR SAI N00014-18-1-2828. This webpage template was borrowed from SIREN.